By Vanessa McBride & Eli Grant, Office of Astronomy for Development

The Office of Astronomy for Development has funded over 100 projects run by enthusiastic astronomers and science communicators with a view to using astronomy to make the world a better place.

But how do we know whether our projects have an impact?

To have a meaningful discussion about this, we first need to define impact more clearly. Is it related to how much money we spend on a project or intervention? Is it the number of people the project reaches? Is it the number of questions we get after giving a public talk? Or none of the above? In education, international development, the health sciences and statistics, the word impact refers to the causal effect that a treatment or intervention has on an outcome of interest.

Before you switch off, let’s illustrate this with an example. Suppose you run an astronomy summer school for undergraduate students, with the intended outcome of encouraging more students to enrol in astronomy postgraduate degrees. But a myriad of personal and circumstantial factors may influence whether students enrol for postgraduate studies. How would you tell whether your summer school had any impact at all?

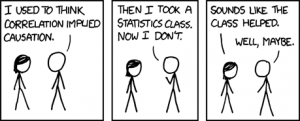

There is also a real danger of falling into the correlation/causation quagmire (see cartoon below, courtesy of xkcd). It may be that students who were already gearing up for astronomy postgraduate programmes were the ones who signed up for your summer school, in which case a large uptake of these students into postgraduate study would tell you nothing about the impact of your summer school!
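To make the self-selection trap concrete, here is a minimal simulation. Every number in it is invented for illustration: we assume a hidden "keenness" trait (held by 30% of students) that makes a student both more likely to sign up and more likely to go on to postgraduate study, and we let the summer school itself add `true_effect` to the chance of enrolling. The function name and parameters are hypothetical.

```python
import random

def naive_delta_with_self_selection(n_students=10_000, true_effect=0.0, seed=1):
    """Compare attendees with non-attendees when students choose whether to attend.

    All probabilities are invented for illustration. A hidden 'keenness' trait
    makes a student both more likely to sign up and more likely to go on to
    postgraduate study; the summer school itself adds `true_effect`.
    """
    rng = random.Random(seed)
    attended, not_attended = [], []
    for _ in range(n_students):
        keen = rng.random() < 0.3                          # hidden confounder (assumed 30%)
        signs_up = rng.random() < (0.8 if keen else 0.2)   # keen students self-select
        p_postgrad = (0.6 if keen else 0.1) + (true_effect if signs_up else 0.0)
        enrols = rng.random() < p_postgrad
        (attended if signs_up else not_attended).append(enrols)
    p_attended = sum(attended) / len(attended)
    p_not_attended = sum(not_attended) / len(not_attended)
    return p_attended - p_not_attended  # naive "impact", not a causal estimate


print(round(naive_delta_with_self_selection(), 3))
```

With `true_effect=0.0`, i.e. a summer school that changes nothing, the naive difference between attendees and non-attendees still comes out large and positive (around 0.27 in expectation with these made-up probabilities), purely because keen students chose to attend.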

Each participant at the summer school can only be observed under one condition (here, attending the summer school), yet her or his potential outcomes are shaped by all of her or his observable (and non-observable) personal characteristics and circumstances, including the summer school you’ve offered. For each participant we observe only one condition and one outcome, e.g. whether they did (or did not) go on to postgraduate studies. All other potential outcomes remain unobserved and counterfactual (i.e. contrary to fact). An analogy is the collapse of the wavefunction in quantum mechanics: a wavefunction is generally a superposition of eigenstates, but a measurement yields only a single eigenstate.
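The idea of one observed outcome plus unobservable counterfactuals (often called the potential outcomes framework) can be sketched as a tiny data structure. This is an illustration only: the `Student` class, its fields and the two example students are hypothetical, and in real data only `observed_outcome()` could ever be filled in.

```python
from dataclasses import dataclass

@dataclass
class Student:
    """One hypothetical summer-school applicant and both of their potential outcomes."""
    name: str
    postgrad_if_school: bool     # potential outcome under F1 (attends the summer school)
    postgrad_if_no_school: bool  # potential outcome under F0 (does not attend)
    attended: bool               # the single condition that actually happened

    def observed_outcome(self) -> bool:
        # Only the potential outcome for the condition that happened is ever seen.
        return self.postgrad_if_school if self.attended else self.postgrad_if_no_school

    def counterfactual_outcome(self) -> bool:
        # The other potential outcome is counterfactual: no data can reveal it.
        return self.postgrad_if_no_school if self.attended else self.postgrad_if_school

    def individual_effect(self) -> int:
        # Needs BOTH potential outcomes, so with real data it cannot be computed.
        return int(self.postgrad_if_school) - int(self.postgrad_if_no_school)


# Made-up students for illustration only.
students = [
    Student("A", postgrad_if_school=True, postgrad_if_no_school=True, attended=True),
    Student("B", postgrad_if_school=True, postgrad_if_no_school=False, attended=False),
]
for s in students:
    print(s.name, "observed:", s.observed_outcome(), "counterfactual:", s.counterfactual_outcome())
```

Student A attended and enrolled, but would have enrolled anyway (individual effect 0); student B did not attend and did not enrol, but would have enrolled after the summer school (individual effect 1). An observer sees only one of the two outcomes for each student, which is why individual effects cannot be measured directly.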

To average over these many personal characteristics, and to accommodate the counterfactual outcomes, we have to use populations (rather than individuals) to measure impact. An equation will help clarify (that’s probably OK, as we’ve just used a quantum mechanics analogy!). If we think of our intervention (the summer school) as analogous to a force, F1, then we can measure the outcome, i.e. the fraction of the student population that proceeds to postgraduate study, and represent this as p(F1). Similarly, the outcome under a different condition (e.g. no summer school) can be represented as p(F0). This formulation gives a simple hypothesis-testing frame, with F0 as the control condition and F1 as the experimental condition; the null hypothesis is that the intervention makes no difference, i.e. p(F1) = p(F0).
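As a sketch of how that hypothesis test might be run, the pooled two-proportion z-test below checks the null hypothesis p(F1) = p(F0) from counts of students who went on to postgraduate study in each group. This is one standard choice, assumed here for illustration rather than taken from the OAD's own tools; it relies on a normal approximation, so with very small groups an exact test (e.g. Fisher's) would be more appropriate. The counts in the usage line are made up.

```python
import math

def two_proportion_z_test(successes_f1, n_f1, successes_f0, n_f0):
    """Test the null hypothesis p(F1) = p(F0) with a pooled two-proportion z-test.

    Returns (difference, z, two_sided_p_value). Uses the normal approximation,
    so it is only sensible when each group has a reasonable number of
    successes and failures.
    """
    p1 = successes_f1 / n_f1
    p0 = successes_f0 / n_f0
    pooled = (successes_f1 + successes_f0) / (n_f1 + n_f0)  # estimate of p under the null
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_f1 + 1 / n_f0))
    z = (p1 - p0) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability of N(0, 1)
    return p1 - p0, z, p_value


# Hypothetical counts: 30 of 50 students under F1 and 20 of 50 under F0 went on to postgrad study.
diff, z, p = two_proportion_z_test(30, 50, 20, 50)
print(round(diff, 3), round(z, 3), round(p, 4))
```

With these invented counts the difference is 0.2, z = 2.0 and the two-sided p-value is about 0.046.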

If we take the population of students we are interested in (e.g. all students who are eligible and sign up for the summer school) and randomly assign half of them to F1 and the other half to F0, we end up with two samples of students that represent the same original population. We can then measure the average outcome under F1 and under F0 in that population, defining impact as:

Δ = p(F1) – p(F0)

which is the difference in outcomes between the sample that received the intervention (F1) and the sample that did not (F0).
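The randomized design and the estimate of Δ can be sketched as a small simulation. Everything in it is assumed for illustration: 30% of applicants have a hidden "keenness" that raises their baseline chance of postgraduate study, the baseline probabilities are invented, and the summer school adds a made-up `true_effect`. In a real trial the outcomes would be observed rather than simulated, and the true effect is exactly what you are trying to learn.

```python
import random

def run_randomized_trial(n_students=10_000, true_effect=0.1, seed=2):
    """Simulate randomly assigning applicants to F1 or F0 and estimate Δ = p(F1) - p(F0).

    Everything here is invented for illustration: 30% of applicants have a hidden
    'keenness' that raises their baseline chance of postgraduate study, and the
    summer school adds `true_effect` to that chance.
    """
    rng = random.Random(seed)
    keen = [rng.random() < 0.3 for _ in range(n_students)]  # hidden confounder (assumed)

    # Random assignment: shuffle the applicants and split them in half.
    ids = list(range(n_students))
    rng.shuffle(ids)
    half = n_students // 2
    f1_ids, f0_ids = ids[:half], ids[half:]

    def enrols(student, treated):
        baseline = 0.6 if keen[student] else 0.1  # made-up baseline probabilities
        return rng.random() < baseline + (true_effect if treated else 0.0)

    p_f1 = sum(enrols(s, True) for s in f1_ids) / len(f1_ids)
    p_f0 = sum(enrols(s, False) for s in f0_ids) / len(f0_ids)
    return p_f1 - p_f0  # estimate of Δ


print(round(run_randomized_trial(), 3))
```

Because the shuffle ignores keenness, keen students end up in both groups in roughly equal proportions, so the estimate lands close to the 0.1 built into the simulation (and close to zero when `true_effect=0.0`), even though keenness strongly affects the outcome.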

To us as scientists, this experimental design (known as a randomized controlled trial) makes perfect sense. But there are two hurdles. The first is the debate about using these methods for complex social problems (which we’ll discuss in a future blog post); the second is that execution can feel (and be) daunting, especially when we’re used to working with photons rather than people. That’s why the OAD is compiling a set of tools to facilitate the design, implementation and monitoring of astro4dev projects, tools we hope will be of great benefit to the broader science community. For more information see this link, or get in touch with us directly.